
SSD aspects of Diablo's Memory1 and DMX

by Zsolt Kerekes, editor - StorageSearch.com - August 2, 2016


For a year before writing this story I had been wondering about the flash controller aspects of Diablo's Memory1. I learned the missing pieces in some conversations I had with the company and I tagged that hitherto little known information onto the back end of a funding news story. For me - it was this architecture which was the real story. But as with so much SSD news the details soon got lost in the archive.

I've extracted this story here so that if you're curious about how a modest DWPD flash SSD can be good enough to emulate DRAM - you'll see how it's done.


Diablo gets more funding for Memory1 and DMX software

Editor:- August 2, 2016 - Diablo Technologies today announced it has secured $37 million across 2 phases of an oversubscribed round of Series C financing.

New investors Genesis Capital and GII Tech Ventures joined the second phase of the round, along with follow-on investments from Battery Ventures, BDC Capital, Celtic House Venture Partners, Hasso Plattner Ventures and ICV.

Editor's comments:- that's kind of interesting - but much more interesting from my perspective was what I learned in a 1 hour conversation with the company last week about the software for their Memory1 (flash as RAM) product.

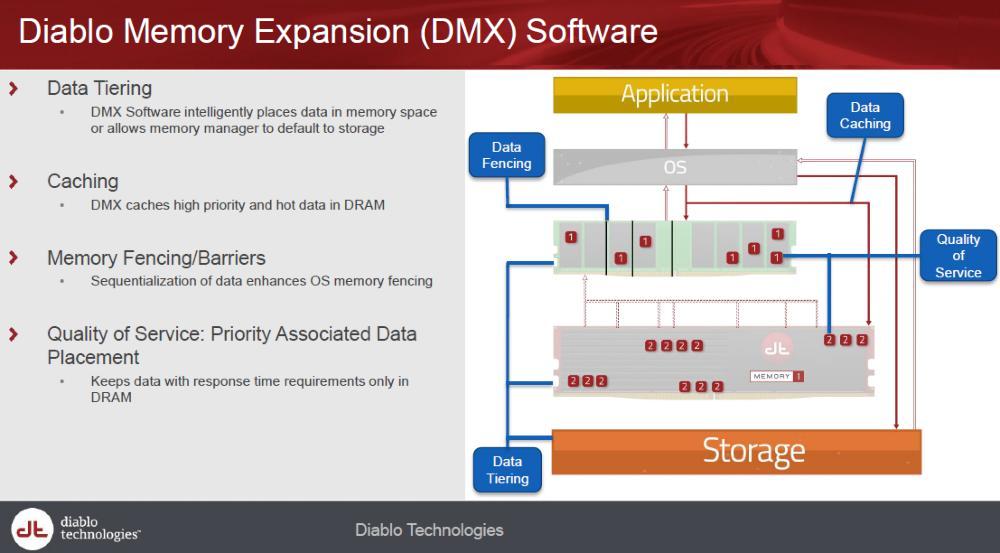

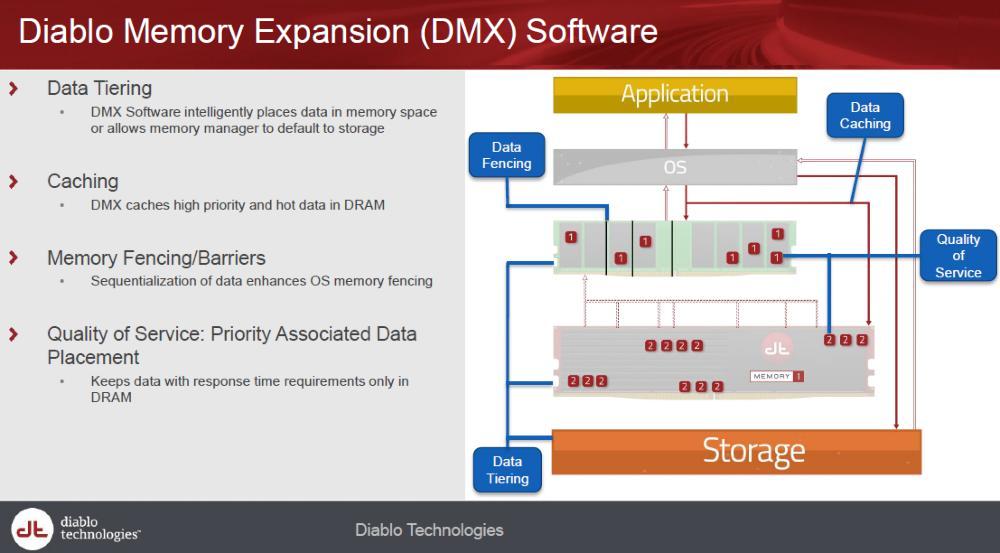

Diablo's DMX software is barely mentioned in the funding PR above. I expected to see more on their web site about this.

I also learned how Diablo handles the flash and endurance issues.

Those aspects were mysterious to me when the product was announced last year. But it's very straightforward.

The best way to think about the caching and tiering side of things is that Diablo's software leverages DRAM on the motherboard.

This DRAM (in another socket) must be present for every 1 or 2 Memory1 modules in the system. And in many respects it uses that DRAM and its own flash in a similar way to the early Fusion-io PCIe SSDs and some of the other tiering and caching products we've seen before, like FlashSoft.

Diablo's DMX operates in memory layers. The company has also applied machine learning to popular and proprietary apps it might work with, so that the software understands the nature of data demand patterns and structures.

Diablo says that - unlike NVMe and those other storage cache / tiering products - the benchmarks they've done with Memory1 show much more acceptable operation - because DMX and Memory1 don't have the same variability of latency which occurs when you go through storage stacks and storage or network interfaces.

This inconsistency of latency is one of the problems I wrote about in my classic article on SSD design symmetries. And the consistency of - for example - random IOPS - was a powerful competitive difference exploited by marketers at enterprise PCIe SSD pioneer - Virident.

There are always infrequent traffic-related congestion and contention problems in any multi-tiered latency system - even in real world physical DRAM controllers.

These rare clogging events (nanoseconds, microseconds, or milliseconds) accumulate to bigger actual latency numbers in storage interchanges. So when you're emulating memory with storage - it's not the best performance which matters. It's the worst case which causes QoS problems.
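
To make the average versus worst case point concrete, here is a minimal sketch. The latency numbers are invented for illustration (they are not Diablo's benchmarks or anyone's measurements), and the names `path_a` and `path_b` are hypothetical. It shows how a path which wins on mean latency can still lose badly at the tail.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples):
    """Return the mean alongside the tail figures which drive QoS."""
    return {
        "mean": sum(samples) / len(samples),
        "p50": percentile(samples, 50),
        "p99.9": percentile(samples, 99.9),
        "worst": max(samples),
    }


# Hypothetical latencies in microseconds (illustrative, not measured).
# Path A: usually fast, but 20 requests in 10,000 stall behind a congested stack.
path_a = [1.0] * 9980 + [2000.0] * 20
# Path B: slower on a typical request, but with no stalls at all.
path_b = [6.0] * 10000

stats_a = summarize(path_a)
stats_b = summarize(path_b)

if __name__ == "__main__":
    for name, stats in (("A", stats_a), ("B", stats_b)):
        print(name, stats)
```

With these made-up numbers path A has the better mean, yet its 99.9th percentile is hundreds of times worse than path B's. That is the kind of gap which matters when an application treats the device as memory.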

Diablo says that the ability of DMX to understand data at the application level and move it between DRAM and flash via the DRAM bus, with native custom silicon controller support for these memory movements, gets results which are on average several times better than the best average PCIe based flash cache alternatives. As you'd expect.

But it's the superiority of the worst case latencies which can prove to be the yea or nay breaking point in the selection between DIMM based flash and other interfaces for critical memory emulation deployments.

DMX includes a QoS latency feature so that application developers can retain control of data they like in DRAM without having to rely on caching intelligence.

Memory1 - from the flash endurance view

The endurance side of Diablo's Memory1 architecture with DMX software is amazingly straightforward and conventional.

If you think of the "flash SSD" as being the combination of the flash in the Memory1 DIMM and the compulsory server DRAM DIMM (on the same server motherboard) then from the flash SSD controller point of view the whole system operates adequately with much lower DWPD requirements than you'd expect from a memory intensive system.

How can an OK DWPD flash SSD be good enough to be a DRAM emulator?

There are several ingredients in the IP juice which - when factored together - greatly reduce the endurance loadings on the flash.

- the SSD has a relatively high RAM to flash cache ratio compared to most SSDs (because it can use as much of the DRAM as it needs)

- all writes to the flash array are done as big sequential writes, which since the earliest days of enterprise flash have been demonstrated to improve reliability and performance.

- write amplification can be minimized more so than in the best (storage focused) SSDs because the DMX software understands popular applications and has more visibility of the memory space and storage space than a typical enterprise SSD.

DMX has control of fetches and lookahead requests from the flash space and is controlling the memory spaces too. The data locality mechanisms which assist efficient caching also reduce stress on endurance.

Diablo calls this "intelligent traffic management". This avoids premature writes to flash for frequently written pages and minimizes read / modify / write operations performed on data while it is resident in the flash.

- and finally - when you look at the whole DRAM contents - the rate of change of data in most general purpose enterprise servers (as a percentage of the whole physical memory capacity) is much less than you might think.

That's because system software has evolved over many years to keep critical stuff in memory. You can see more about the DRAM side of queues and caches and DRAM controller latencies in this article here. It's this legacy of virtual memory (memory being the combination of HDD and DRAM) which is the underlying market enabler of DIMM wars memory tiering products to deliver cost and power benefits. In Memory1 it's not the flash or the controllers or the interface or any single aspect of the design which makes this a significant solution which needs to be assessed by big memory users - it's the integrated systems level operation.
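
The endurance ingredients listed above can be sketched with a toy model. This is not Diablo's DMX code. The class `WriteBackBuffer`, its parameters and the workload are hypothetical, chosen only to show how a DRAM buffer which absorbs rewrites of hot pages and flushes in batches cuts the number of page writes reaching flash. The `dwpd` helper uses the usual definition of drive writes per day (data written per day divided by device capacity).

```python
from collections import OrderedDict


class WriteBackBuffer:
    """Toy DRAM buffer: absorbs rewrites, flushes the oldest dirty pages in batches."""

    def __init__(self, capacity_pages, batch_pages):
        self.capacity_pages = capacity_pages
        self.batch_pages = batch_pages
        self.dirty = OrderedDict()  # page id -> None, least recently written first
        self.flash_page_writes = 0
        self.flush_batches = 0

    def write(self, page):
        # A rewrite of a page already buffered costs no flash write.
        self.dirty[page] = None
        self.dirty.move_to_end(page)
        if len(self.dirty) > self.capacity_pages:
            self._flush_oldest()

    def _flush_oldest(self):
        # One batch stands in for one large sequential write to flash.
        count = min(self.batch_pages, len(self.dirty))
        for _ in range(count):
            self.dirty.popitem(last=False)
        self.flash_page_writes += count
        self.flush_batches += 1

    def drain(self):
        """Flush everything still dirty, batch by batch."""
        while self.dirty:
            self._flush_oldest()


def run_workload():
    """Hypothetical workload: 8 hot pages rewritten constantly, plus occasional cold pages."""
    buf = WriteBackBuffer(capacity_pages=64, batch_pages=16)
    logical_writes = 0
    for i in range(1000):
        buf.write(i % 8)            # hot page, rewritten over and over
        logical_writes += 1
        if i % 10 == 0:
            buf.write(1000 + i)     # cold page, written once
            logical_writes += 1
    buf.drain()
    return logical_writes, buf.flash_page_writes, buf.flush_batches


def dwpd(bytes_written_per_day, flash_capacity_bytes):
    """Drive writes per day: daily write volume as a multiple of capacity."""
    return bytes_written_per_day / flash_capacity_bytes


if __name__ == "__main__":
    logical, flash, batches = run_workload()
    print(f"logical writes: {logical}, flash page writes: {flash}, batches: {batches}")
```

In this toy run the hot pages never reach flash until the final drain, so the flash sees roughly a tenth of the logical writes. A write-through design would see all of them, and the DWPD figure scales down in the same proportion.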

This is a big controller architecture approach which integrates chips and its own flavor of SSD everywhere aware software to grab visibility and manage control over many different elements of the applications delivery experience.


Related articles

where are we heading with memory intensive systems?

August 2015 - Diablo launches Memory1 (volatile flash replacing DRAM)

Retiring and retiering enterprise DRAM was one of the big SSD ideas in 2015.

What were the big SSD, storage and memory architecture ideas which clarified in 2016?
