this way to

the petabyte SSD - 2016 to 2020 roadmaps

In

which the author explains why he thinks users will

replace their

hard drives with

SSDs in the last bastions

of the datacenter (the cost sensitive utility storage archive) even if the

cost of terabyte hard drives

drops to zero...

And publishes the business plan for a new industry in the

SSD market.

Now's a good time to get that coffee (and the headache pills).

This

article maps my vision of the steps needed for the storage market to

deliver affordable 2U rackmount

SSDs with a PB capacity (1,000 TB) in the soonest possible time using

evolutionary steps in

chip technology but calling

for a revolutionary change in

storage architecture.

how does this article fit in with earlier and later SSD market

model articles?

It can be read on its own - or viewed as a sequel

to 2 preceding articles:-

- SSD

market adoption model (2005 edition) - in which I described why users would

buy SSDs. This analyzed the user value propositions for the 4 main markets in

which SSDs would be adopted in the decade following publication.

- How solid

is hard disk's future? (2007) - in which I explained that the growth of the

SSD market wouldn't result in any sizable reductions in overall hard disk

market revenue till about 2011 - because the HDD market was itself gaining

revenue from new consumer markets such as video recorders almost as fast as it

was losing revenue to SSDs in

notebooks

and embedded markets.

This Petabyte SSD article also forms part of

the narrative in the (2012) article -

an introduction to

enterprise SSD silos (7 ways to classify where all SSDs will fit in the

pure SSD datacenter).

this way to the petabyte SSD

This article

previews the

final chapter in the

SSD vs HDD wars.

The elimination of hard drives from what is currently (2010) their

strongest bastion - the bulk data storage market in the data center.

That's

been a cost sensitive market in which the cost per terabyte arguments (which I

showed were irrelevant in 4 out of the 5 cases in the

SSD market

penetration model) have been very relevant in this 5th and final case.

Media / capacity cost has been a protective shield to hard disk makers.

This

new article will show how that last remaining obstacle in the SSD

domination roadmap will be removed.

It will describe new user

value propositions (which were not in my

earlier SSD

market adoption models) whereby the transition to solid state storage

takes place irrespective of whether

SSDs

intersect with the magnetic cost per terabyte curve. It describes

the market climate in which enterprise users will not find hard drives

attractive or usable - even if the cost of buying a complete new HDD drops

away to nothing.

This is a much more complicated market to model than

you might guess (even for an experienced

SSD analyst like

myself) for these reasons:-

- MLC flash SSDs

(the best looking horses in the theoretical race to achieve HDD and SSD

price parity) will not play a significant part in dislodging hard drives from

their use in archives for reasons explained later in this article connected with

data integrity.

Later note added in 2012 - I was wrong about this. But

the fact that other nand flash can do fast-boot reliably - doesn't change the

conclusions in the article. The use of

adaptive R/W

DSP in MLC flash will enable TLC / QLC to play the same market

replacement role which I originally anticipated would require mainly powered

down SLC with fast boot. But apart from this memory type difference - the market

impact and architecture assumptions below remain unchanged.

- New emerging applications and market conditions (in the 2011 to 2019

decade) will put much greater stress on archived data.

These new

search-engine centric applications will accelerate the growth of data

capacity (by creating automatically generated data flow patterns akin to

anti-virus string matching - which will speed up the anticipation and

delivery of appropriate customer data services).

At the same time

these new apps will demand greater IOPS access into regions of storage which

have hitherto been regarded as offline or nearline data. Those factors would -

on their own - simply increase storage costs beyond a level which most

enterprises can afford.

- The ability to leverage the data harvest will create new added value

opportunities in the biggest data use markets - which means that

backup will no longer

be seen as an overhead cost. Instead archived data will be seen as a potential

money making resource or profit center. Following the

Google

experience - that analyzing more data makes the product derived from that

data even better. So more data is good rather than bad. (Even if it's

expensive.)

- Physical space and electrical power will continue playing their part as

pressure constraining corsets on these systems. That's why new SSD architectures

will be needed - because current designs are incapable of being scaled into

these tight spaces.

Business plan for a new SSD market

In

some ways I feel like any product manager launching any new product - nervous

and hopeful that it will get a good reception. The product in this case -

includes the idea of an entirely new class of SSDs.

By sharing this

vision I'm throwing down the gauntlet to product managers in the

SSD,

hard disk,

D2d and

tape library markets and

saying - "Look guys - you can do this. All the technology steps are

incremental from where we are today... just start working on your aspect of the

jigsaw puzzle. Because if you don't figure out which place you want to occupy in

this new market (circa 2016) - then you will be unprepared for the market when

it arrives - and won't have a business."

Because the audience for

this article is technologists, product managers, senior management in storage

companies and (as ever) founders of storage start ups... I'm not going to

clutter up this article with things you already should know - or can easily

find out - by reading any of the hundreds of other SSD related articles I've

already published here in the past 11 years. Instead I'm going for a leaner

style.

The 5 propositions discussed in this article are:-

- what kind of animal will the PB SSD be?

- who's going to buy it - and why?

- where will it fit in the datacenter storage architecture?

- what are the technical problems which need to be solved?

- looking back at the last bastions of magnetic storage

The easiest

way to demonstrate the first 2 points is to roll forwards in time to the

fictional launch press release for this type of product.

Stealth mode startup wakes

petabyte SSD appliance market

Editor:- October 17, 2016 - Exabyte SSD Appliance emerged from stealth

mode and today announced a $400 million series C

funding round and

immediate availability of its new Paranoid S3B series - a 2U entry level

Solid State Backup appliance

with 1PB (uncompressed) capacity.

Sustainable sequential R/W speeds

are 12GB/s, random performance is 400K IOPS (MB blocks). Latency is 10

microseconds (for accesses to awake blocks) and 20 milli-seconds (for data

accesses to blocks in sleep mode.)

The scalable system can deliver 20PB

of uncompressed (and RAID protected) nearline storage in a 40U cabinet - which

can be realistically compressed to emulate 100PB of rotating hard disk storage

using less than 5kW of electric power.

Preconfigured personality

modules include:- VTL / RAID emulation (Fujitsu, HP and IBM), wire-speed

dedupe, wire

speed compression / decompression and customer specific

encryption. Exabyte

SSD also offers fast-purge

as additional cost options for Federal customers or enterprises, like banks,

whose data may be at higher risk from terrorist attacks. Pricing starts from

$100,000 for a single PB unit with 4x

Infiniband /

FC or 2x

Fusion-io compatible

SSD ports. The company is seeking partnerships with data

migration

service companies.

Editor's comments:- the "holy grail" for

SSD bulk archives is to be able to replicate and replenish the entire

enterprise data set daily - while also coping with the 24x7 demands of

ediscovery, satellite office

data recovery,

datacenter server rebuilds and the marketing

department's heavy loads (arising from the new generation of

Google API inspired

CRM

data population software toys.) The Paranoid S3B hasn't quite achieved that

lofty goal - with the current level of quoted performance (because in my

opinion the proportion of "static data" - mentioned in the full

text of the press release is much higher than is found in most corporations).

Despite those misgivings the Paranoid S3B is the closest thing in the market

to the idealized SSD bulk archive library as set out in my

2012 article.

Internally the Paranoid uses the recently announced 50TB

SiliconLibrary (physically fat but architecturally

skinny) SLC

flash SSDs from

WD - instead of

the faster (but lower capacity) 2.5" so called "bulk archive"

SSDs marketed by competing vendors. In reality many of those wannabe SSD archive

SSDs are simply remarketed consumer video SSDs.

Exabyte SSD's

president Serge Akhmatova told me - "...Sure you might use some of those

other solutions on the market today if you only need to buy a few boxes and can

fit all your data in a handful of Petabytes. Good luck to you. That's not our

market. We're going for the customers who need to buy hundreds of boxes. Where

are customers going to find the rackspace if they're using those old style,

always-on SSDs? And let's not forget the electrical power. Our systems take 50x

less electrical power - and are truly scalable to exabyte libraries. When you

look at the reliability

of the always-on SSDs it reminds me about the bad old days of the hard disk

drives - when you had to

change

all the disks every few years."

The recently formed

SSD Library Alliance is

working on standards related to this class of SSD products - and will publish

its own guidelines next year. I asked Exabyte SSD's president - was he worried

that Google might launch its own similar product - because they were likely to

be the biggest worldwide user for this type of system.

"I

can't speculate on what Google might do in the future" said Serge

Akhmatova "we signed NDAs with our beta customers. But it does say in

our press release that the new boxes are 100% compatible with Google

APIs. We worked very closely together to make absolutely sure it works

perfectly. You draw your own conclusions."

Editor (again):-

students of

SSD market

history may recall that one of the early pioneers in the SSD dedupe

appliance market was WhipTail

Technologies (who launched their 1st product in February

2009)....

where will

it fit in the datacenter storage architecture?

In an earlier

article -

Why I Tire of "Tier

Zero Storage" - I explained why I think that numbered storage tiers -

applied to SSDs is a ridiculous idea - and I still hold to the view that SSD

tiers are relativistic (to the application) rather than absolute.

But

where does the Paranoid PB SSD appliance fit in?

In my view there are 3

distinct tiers in the SSD datacenter.

- acceleration SSD - close to the application server - as a DAS

connection in the same or adjacent racks (via

PCIe,

SATA,

SAS etc.)

- auxiliary acceleration SSD - on the

SAN /

NAS.

- bulk storage archive SSD - whose primary purpose is affordable

bulk storage which is accessible mainly to SAN / NAS - but in some data farms -

may connect directly to the fastest servers.

Some of these tiers

will have sub-tiers - because of the realities of market economics. But only 7

different types of SSDs are needed to sustainably satisfy all the architecture

needs in the pure solid state storage data center. The Petabyte SSD rack is 1 of

those 7. You can read about the rest in my article -

an introduction to

enterprise SSD silos.

technical problems which need to be solved

...introducing a

new species of storage device - the bulk archive SSD

This

is a very strange

storage animal

which - although it internally uses nv memory such as flash - and externally looks

like a fat 2.5" SSD

- looks as alien architecturally to a conventional notebook or server SSD as

does a tape library to a hard drive. The differences come from the need to

manage data accesses in a way which

optimizes power use

(and avoids the SSD melting) rather than optimizing the performance of data

accesses.

The requirements of the

controllers for

SSDs in bulk store applications differ from those in today's SSDs (2010) in

these important respects:-

- optimization of electric power - the need to power manage memory blocks

within the SSD so that at any point in time 98% are in sleep mode (in the

powered off state). I'm assuming that the controller itself is always in the

mostly awake state.

- architecture - internally each SSD controller is managing perhaps 20 to 50

independently power sequenceable SSDs. In this respect the 2.5" SSD

architecture resembles some aspects of a mini auto MAID system.

- endurance

- data writes to the library chips are nearly always in large sequential blocks

(because it's a bulk storage appliance) therefore

write amplification

effects are a lesser concern than with conventional SSDs. Also the main memory

is SLC - not MLC due to the need for data integrity. That's partly because the

thousands of power cycles which occur during the life of the product - which can

be triggered by reads (not just by writes) would lead to too many disturb

errors - and also because the logic error bands in MLC thresholds are too

small to cope with the electrical noise in these systems.

...Later:-

I was wrong about the need for these bulk storage SSDs to be built around SLC. A

few years after writing this article developments in

adaptive SSD controller design and DSP

- showed that MLC, TLC (x3) and even QLC (x4) can be

managed to produce

adequate operating life and data integrity.

- power up / power down - for the controller this is a different environment

than a notebook

or conventional server

acceleration SSD. It lives in a datacenter rack with a short term battery

hold-up and in no other type of location - ever. The power cycling must be

optimized to reduce the time taken for the 1st data accesses from the sleep

state. (Fast boot to ready SSDs.)

- performance - R/W throughput and

IOPS are

secondary considerations for this type of device and likely to lag 2 to 3 years

behind the best specs seen for other types of rackmount SSDs. In the awake state

sequential R/W throughput - for large data blocks - has to be compatible with

rewriting the whole SSD memory space in approx 24 hours or less.
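As a rough sanity check on the figures above, here is a minimal sketch rather than a design rule. It works out how long one full sequential rewrite takes, and what the average access latency looks like when most accesses land on sleeping blocks. The 1PB capacity, 12GB/s throughput, 10 microsecond awake latency and 20 millisecond wake latency come from the fictional press release earlier in this article. The function names, the decimal units (1PB = 10^15 bytes) and the assumption that accesses are spread evenly across all blocks (so that 98% of them hit sleeping blocks) are illustrative assumptions only.

```python
PB = 10**15  # assumption: decimal petabyte, in bytes
GB = 10**9   # assumption: decimal gigabyte, in bytes


def full_rewrite_hours(capacity_bytes: float, throughput_bytes_per_s: float) -> float:
    """Hours needed to sequentially rewrite the whole capacity once."""
    if throughput_bytes_per_s <= 0:
        raise ValueError("throughput must be positive")
    return capacity_bytes / throughput_bytes_per_s / 3600.0


def mean_access_latency_s(asleep_hit_fraction: float,
                          awake_latency_s: float,
                          wake_latency_s: float) -> float:
    """Average latency when a given fraction of accesses hits sleeping blocks."""
    if not 0.0 <= asleep_hit_fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    return (asleep_hit_fraction * wake_latency_s
            + (1.0 - asleep_hit_fraction) * awake_latency_s)


# Figures quoted in the fictional 2016 press release.
hours = full_rewrite_hours(1 * PB, 12 * GB)
print(f"full rewrite: {hours:.1f} hours")            # full rewrite: 23.1 hours

# Hypothetical worst case: accesses uniformly spread, 98% of blocks asleep.
latency = mean_access_latency_s(0.98, 10e-6, 20e-3)
print(f"mean access latency: {latency * 1000:.2f} ms")  # mean access latency: 19.60 ms
```

Under these assumptions a full rewrite just fits inside the 24 hour target, and uniformly random access is dominated by the wake time.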

The

design of the archive SSD presents many difficult challenges for designers and

I'm not going to understate them. But in my view they are solvable - given the

right economic market for this type of product and overall feature benefits.

Here are some things to think about.

- refresh cycle - you know about

refresh

cycles in DRAMs - why

need one for

flash? The answer is that

seldom accessed data inside the SiliconLibrary could spend years in the

unpowered (or powered but static data) state. That would be a bad thing -

because the data retention of the memory block can decline in certain

conditions

increasing

the risk of data loss. So guaranteeing integrity in the SSD requires a

house-keeping task which ensures that ALL memory blocks in the SSD are powered

up and refreshed at regular intervals - maybe once every 3 months - for example.

If you're familiar with tape library management - think of it as "spooling

the tape."

- surges and

ground

bounce in the SSD. If uncontrolled - these could be a real threat to data

integrity. That's one of the reasons why the wake access time has been

specified as a number like 20 milliseconds - instead of an arbitrary number

like 200 micro-seconds. Soft starting the power up (by current limiting and

shaping the slope) will reduce noise spikes in adjacent powered SSDs and also

minimize disturb

errors.

- awake duty cycle. I haven't said much about the nature of the duty cycle

for the powered up (awake) state for memory blocks. To achieve good power

efficiency I've assumed that in the long term this will be just a few per cent

of the time. But how will it look on any given day? That depends on the HSM

scheme used in the SSD library. My working assumption is that once an SSD block

is woken - it stays in use for a period ranging from seconds to minutes. The

controller which woke it needs to have a way of anticipating this. As the SSD

library is the slowest tier in the SSD storage world - it would be reasonable to

assume that the device which originated the request (an online SSD) can do some

buffering and pack or unpack data requests into multiple GB chunks. Something

for the "hello

world!" members of the design team to think about.
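The refresh-cycle housekeeping in the first bullet above can be sketched as a small scheduler. This is a toy model under stated assumptions, not a controller design: the `Block` record, the function names, the 90 day interval (standing in for "maybe once every 3 months") and the 2% cap on blocks woken at once (mirroring the 98% sleep target mentioned earlier) are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List

DAY_S = 24 * 3600  # seconds in a day


@dataclass
class Block:
    """One independently power-switched memory region (hypothetical model)."""
    block_id: int
    last_powered_s: float  # time at which this block was last awake


def blocks_due_for_refresh(blocks: List[Block], now_s: float,
                           refresh_interval_s: float = 90 * DAY_S,
                           max_awake_fraction: float = 0.02) -> List[Block]:
    """Return the stalest overdue blocks, capped so that only a small
    fraction of the device is woken for housekeeping at any one time."""
    budget = max(1, int(len(blocks) * max_awake_fraction))
    overdue = [b for b in blocks if now_s - b.last_powered_s >= refresh_interval_s]
    overdue.sort(key=lambda b: b.last_powered_s)  # oldest first
    return overdue[:budget]


def refresh(blocks: List[Block], now_s: float) -> List[int]:
    """Refresh one batch of due blocks and return their ids."""
    due = blocks_due_for_refresh(blocks, now_s)
    for b in due:
        # Stands in for: soft-start power up, read and verify, rewrite if
        # needed, power down. Only the timestamp is modelled here.
        b.last_powered_s = now_s
    return [b.block_id for b in due]


# Usage: 100 blocks, block i last powered on day i; it is now day 150.
library = [Block(i, i * DAY_S) for i in range(100)]
print(refresh(library, 150 * DAY_S))  # [0, 1]
print(refresh(library, 150 * DAY_S))  # [2, 3]
```

Sorting by staleness means the blocks that have been unpowered longest are refreshed first, and the cap keeps housekeeping from undoing the power savings which the sleep state is there to provide.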

looking

back at the last bastions of magnetic storage

I published my long

term projections for HDD revenue in an earlier article -

Storage Market Outlook

2010 to 2015.

The hard disk market doesn't have to worry about an

imminent threat from bulk storage SSDs for many years.

Instead I think

the concept opens the door to a fresh opportunity for companies in the

storage market to re-evaluate themselves.

If they believe the PB SSD

will be coming (and the details may be different to the way I have suggested)

where will their own companies fit in?

I've also started to discuss

this idea with software companies too. Because the new concept opens up entirely

new ways of thinking about backed up data. What is it for? What can you do with

it? How can you grow new business tools from content which up to now - was just

out of sight and out of mind?

There are many exciting challenges for the

market ahead.

(And many mistakes too in the initial draft of this

article - which I'll pick up and deal with later.)

Thanks for taking

the time to read this. If you like it please let other people know.

Key ideas to take away

from this article

- More data is better - not worse. Data volumes will expand due to new

intelligence driven apps. But the data archive will be seen as a profit center -

instead of a cost overhead.

- the "SSD revolution" didn't end in

2007. It will

not stop soon - and instead will factionalize into SSD civil wars. Some of these

will overlap - but many won't.

- True archive SSDs using switched power management may be able to pay for

themselves by saving on electrical costs, disk replacement and datacenter space

- even if the competing hard drives are free. But it will be impossible for

hard drives to deliver the application performance needed in the petabyte

ediscovery and Google API environment anyway.

- Petabyte SSD systems - used for

cloud and

archive applications would

benefit from fast boot SSDs (with low standby power) but don't need fast

R/W performance at the single drive level. When originally writing this article

in early 2010 - I thought the fast boot and data integrity could only be met by

SLC - but now it looks like

adaptive R/W

technology coupled with developments in the

consumer market -

such as DEVSLP could provide these functions more cheaply.

- There will be no

room in the datacenter for rotating storage of any type. It will be 100% SSD

- with just 3 types of distinct SSD products.
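The "pays for itself even if the hard drives are free" idea in the list above is a payback calculation, which can be sketched as follows. This is a minimal illustration under stated assumptions: the function name and parameters are hypothetical, the $100,000 purchase price is the figure from the fictional press release, and the two annual running cost figures (power, drive replacement and rack space combined) are placeholder inputs chosen only to make the arithmetic visible, not estimates.

```python
from typing import Optional


def years_to_break_even(ssd_purchase_cost: float,
                        hdd_running_cost_per_year: float,
                        ssd_running_cost_per_year: float,
                        hdd_purchase_cost: float = 0.0) -> Optional[float]:
    """Years until lower running costs repay the SSD system's higher
    purchase price. Returns None if the SSD system never catches up."""
    extra_capex = ssd_purchase_cost - hdd_purchase_cost
    annual_saving = hdd_running_cost_per_year - ssd_running_cost_per_year
    if extra_capex <= 0:
        return 0.0   # no price premium to recover
    if annual_saving <= 0:
        return None  # running costs are not lower, so no payback
    return extra_capex / annual_saving


# Placeholder inputs: free hard drives (hdd_purchase_cost defaults to 0),
# $100,000 SSD appliance, hypothetical yearly running costs for each system.
print(years_to_break_even(100_000, 60_000, 10_000))  # 2.0
```

The point of the sketch is the shape of the argument: once the purchase price of the disks is set to zero, the outcome depends entirely on the gap in running costs.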


Footnotes about the companies mentioned in this article

Some

of the companies mentioned in the "fictional" part of this article -

to illustrate the 2016 press release - are real companies. These are the

reasons why I chose the companies whose names I used to illustrate certain

concepts. And I hope they won't be offended.

- WD Solid State

Storage (SiliconLibrary) - WD's SSD business unit has been intensively

testing SSD data integrity by running individual SSDs through thousands of

power cycles - since 2005 - as part of verifying its

PowerArmor

data protection architecture. These are the longest running such test programs

I know - and they have already harvested data from millions of hours of device

tests (by 2010).

Understanding what happens to MLC SSDs when subjected

to these stresses is a key factor in the confidence to design bulk storage SSDs

- in which each memory block may undergo upwards of 5,000 power cycles in its

operating life.

Although many other industrial flash SSD companies

also have experience in this area - WD also has experience with designing hard

disks which have good power performance in sleep mode. These features have not

been widely deployed in MAID systems because of the long wake access time. Will

the 20ms wake time - which I've proposed for archive SSDs - prevent its acceptance?

We will see.

- Fusion-io (SSD

port) - although SSDs currently use standard interfaces within and between racks

- I speculate that in some markets there will be a cost / performance advantage

to creating a new proprietary interface to "get the job done." I'm

proposing that Fusion-io - already the best known brand in the

PCIe SSD market (2010)

- is a likely company to adapt its products for new markets when the need

arises - and create a new de facto industry standard for inter-rack SSD ports.

- Google - It's not

unreasonable to expect that in the 6 years following publication of this article

Google (who already markets

search appliances,

is the #1 search company,

and is working on an SSD based OS

for notebooks) will be setting many key standards for the manipulation of large

data sets within the enterprise. Google APIs will be as important to CIOs in

the future as Oracle and other SQL compatible databases have been in the past

as tools which support data driven businesses.

When 1,000 Terrorbytes gather - it's spooky.

"Business thinking will change - towards valuing more data. But

physical space and electrical power will continue playing their part as pressure

constraining corsets on these systems. That's why new SSD architectures will be

needed - because current designs are incapable of being scaled into these tight

spaces"


Petabyte SSD Milestones from Storage History

Viking ships 50TB SAS SSDs

Editor:-

July 11, 2017 - Viking

began shipping dual port 50TB 3.5" SAS SSDs for the enterprise flash

array market. The new capacity optimized drives use 2D flash and use enterprise

array management technology licensed from AFA maker Nimbus. See

sauce for the

SSD box gander for an interview article which explains this

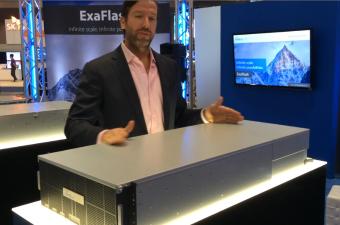

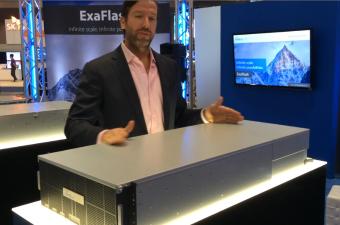

Nimbus re-emerges from stealth with 1PB / U raw HA SSD

Editor:-

August 9, 2016 - Nimbus

Data Systems has emerged from its self imposed exit into

marcomms stealth

mode with the

announcement

of a new range of Ethernet/FC/Infiniband attached rackmount SSDs based

on its new

ExaFlash

OS with GA in Q4 2016.

Entry level products start in a 2U box with

50TB raw capacity for under $50K and for larger configurations Nimbus says its

ExaFlash offers an effective price point as low as

$0.19 per

effective gigabyte (including all software and hardware).

Higher

density boxes in this product line - D-series models - will have 4.5 PB raw

capacity in 4U (12 PB effective).

Re the

architecture

- I haven't seen details - Nimbus says there is no data network between the

storage arrays themselves, guaranteeing that performance truly scales in

lock-step with capacity and with consistent latency.

video above shows the 4PB raw ExaFlash

at FMS

Editor's comments:- if there are to be sustainable

roles in the future

consolidated

enterprise SSD systems market for AFA vendors which previously sold arrays

of SAS/SATA SSDs - and who don't own their own semiconductor fabs - the only

viable ways to establish such platform brand identities are with SSD

software and architecture.

There's a huge gap between the technological

aspiration which Nimbus talks about and the weakness of its past marketing and

the kind of

funding which we've

seen competitors in this market burn through in the past with mixed results.

In the next few quarters I hope we'll hear more from Nimbus about its

business development plans and customer adoption.

See also:-

roadmap to the

Petabyte SSD,

the unreal

positioning of many flash array "startups"

enterprise SSD petabytes doubled in China in 2015

Editor:-

March 15, 2016 - Gregory

Wong, President,

Forward

Insights said that Enterprise SSD petabytes doubled YoY in the China

market in 2015.

Caringo gets patent for adaptive power conservation in SDS pools

Editor:-

May 19, 2015 -

Caringo today

announced

it has obtained a US patent for adaptive power conservation in storage

clusters. The patented technology underpins its Darkive storage management

service which (since its

introduction

in 2010) actively manages the electrical power load of its server based

storage pools according to anticipated needs.

HDS has shipped 19PB of enterprise flash in past quarter

Editor:-

May 9, 2014 - A recent blog - in ArchitectingIT

- says that 3 leading vendors shipped a sum total of over 50PB of

rackmount SSDs in

Q1 2014 - with HDS

- apparently having shipped more flash capacity than either EMC or Pure Storage

according to estimates by the blog's author Chris Evans.

NetApp has shipped 59PB of SSDs in past 3 years

Editor:-

November 19, 2013 - Among other things - Network Appliance

today

disclosed

it has shipped over 59 petabytes of flash storage in the past 3 years.

Editor's

comments:- What NetApp actually said was "over 60PB to date".

My calculation goes like this... The company shipped 1PB in its first

year in the SSD market - which ended in the 3rd quarter of

2010. So

it's shipped approximately 60PB in 3 years. Probably more than 1/2 of that will

have been in the past year.

How does that compare with others? It

doesn't sound like a lot in the context of today's market.

According

to a

blog

by Toshiba - the

analyst data which

they have

aggregated

projects that 8,000 PB of enterprise flash SSDs will ship in 2014.

Skyera will ship half petabyte 1U SSD in 2014

Editor:-

August 13, 2013 - Skyera

today

launched

the next version of its

rackmount SSDs -

the skyEagle - which will ship in the first half of 2014 - offering 500TB

uncompressed (2.5PB deduped and compressed) in a 1U form factor at a record

breaking list price

expected to be under $2,000 per uncompressed terabyte.

Nimbus ships petabyte SSDs / month

Editor:- January

22, 2013 -

Nimbus Data Systems

today announced

it has been shipping at the rate of over 1

petabyte of SSD

storage / month.

WhipTail shipped over 3 petabytes SSD storage in 2012

Editor:-

January 17, 2013 -

WhipTail

recently

announced

that its revenue grew 300% in

2012 and that

it has shipped a total of over 3.7 petabytes of SSD storage.

Nimbus HALO OS supports 0.5 Petabyte SSD in a single SSD file

system

In January 2012 -

Nimbus

announced

its entry into the

high availability

enterprise SSD market with the unveiling of the company's -

E-Class systems -

which are 2U rackmount SSDs with 10TB

eMLC per U of

usable

capacity and no single point of failure.

Nimbus software (which

supports up to 0.5

petabytes in a

single SSD file system) automatically detects controller and path failures,

providing non-disruptive failover. Pricing starts at approx $150K for a 10TB dual

configuration system.

0.4 Petabyte SLC SSD feasible in a single cabinet

In

December 2011 - Texas Memory

Systems

announced

imminent availability of the

RamSan-720

- a 4 port (FC/IB) 1U

rackmount SSD

which provides 10TB of usable 2D (FPGA implemented)

RAID protected and hot

swappable - SLC

capacity with 100/25 microseconds R/W latency (with all protections in

place) delivering 400K IOPS (4KB), 5GB/s throughput - with no single point of

failure (at $20K/TB approx list).

The high density and low power

consumption of this SSD made it feasible to stuff over 400TB of usable SSD

capacity into a single cabinet

without fear of over

heating.

Hybrid Memory Cubes tick boxes for feasibility of Petabyte SSDs

In October 2011 - Samsung and

Micron

launched a new industry

initiative - the Hybrid

Memory Cube Consortium - to standardize a new module architecture

for memory chips - enabling greater density, faster bandwidth and lower power.

HMC will enable SSD designers to pack 10x more

RAM capacity into the same

space with up to 15x the bandwidth, while using 1/3 the power due

to its integrated power management plane. The same technology will enable

denser flash SSDs too.

university researchers compare SSD and tape archives

In October 2011 - a white paper -

Using Storage Class

Memory for Archives with DAWN, a Durable Array of Wimpy Nodes (pdf) -

written by academics at University of California, Santa Cruz and Stanford

University - and published under the auspices of the Storage Systems Research Center

compared the long term cost and reliability of solid state archival storage and

traditional media - such as tape.

over 100 Petabytes of SandForce Driven SSDs in 1 year

In

February 2011

-

SandForce

announced it had shipped more than one million of its

SF-1500 and SF-1200 SSD

Processors since they were released into production in 2010.

SandForce

Driven SSD manufacturers shipped more than 100 Petabytes of NAND flash into

the mainstream computing markets.

Fusion-io shipped 15 Petabytes of SSD accelerators in 1 year

In

January 2011

- Fusion-io

announced

that in the past 12 months it had shipped more than 15 petabytes of its

enterprise flash SSD accelerators.

The company said that more than

2,000 end users have chosen to architect their enterprise infrastructure

upgrades with Fusion's ioMemory technology, including more than half of the

Fortune 50.

EMC shipped 10 Petabytes of SSDs in 2010

EMC's President and COO,

Pat Gelsinger said the company had shipped 10 petabytes of flash SSD

storage in 2010.

TMS has shipped a Petabyte of enterprise SSDs

In a

webinar (Nov 2010) -

Levi Norman, Director of

Marketing -

Texas Memory Systems

disclosed that the company already has more than a petabyte of its enterprise

SSDs installed and running in customer sites (mostly in banks and telcos). That

was a mixture of RAM SSD and flash.

Skyera founded

In July 2010 - Skyera was founded.

The company was originally called StorCloud - but changed its name later. The

company was founded to develop the technologies needed for petabyte scale bulk

storage SSD systems which would be cheaper than HDD arrays.

NetApp ships a petabyte of flash cache

In June 2010 -

Network Appliance

disclosed that it had shipped more than a petabyte of flash SSD acceleration

storage since introducing the product 9 months earlier.

Spectra Launches Highest Density Tape Library

March

10, 2009 - Spectra Logic announced the T680 - the first

tape library which stores

a full petabyte of data in a single rack.

It supports 12 full-height

tape drives and 680 tape cartridges. Interface connections include - FC, SCSI or

iSCSI. Throughput is 10.4TB/Hour (compressed) with LTO-4 drives and media.

EMC Announces Petabyte Disk Storage Array

January 26, 2006

- EMC announced availability of the world's first

storage disk array capable

of scaling to more than one petabyte (1,024 terabytes) of capacity.

The record capacity is made possible through the qualification of Symmetrix

DMX-3 system configurations supporting up to 2,400 disk drives and the

availability of new 500GB low-cost Fibre Channel disk drives.

Unisys Issues "Real-Time Petabyte Challenge"

November

8, 2004 - Unisys announced the "Real-Time Petabyte

Challenge" - a research initiative aimed at developing petabyte-sized

storage that will allow researchers, and businesses to have instant, real-time

access to vast amounts of data at a more affordable price.

"Petabyte

storage is not a new phenomenon," said Peter Karnazes, director of High

Performance Computing at Unisys. "However, what is revolutionary is the

requirement to immediately access large amounts of this data. Within the next 5

years, as businesses strive to comply with information security legislation,

online petabyte storage will become a necessity. To realize this vision,

significant advancement in storage and storage management must be achieved to

dramatically reduce the cost of these storage systems, which would otherwise

fall in the range of $50 to $100 million using today's enterprise-class storage

devices. Unisys goal in this challenge is to develop a highly manageable system

of reliable commodity storage disks at one-tenth the price."

Sony Unveils PetaSite Tape Library

March 24, 2003

- Sony unveiled the PetaSite tape library family with up to 250TB of

native capacity per square meter of floor space, and a total native capacity of

up to 1.2 petabytes (PB).

The new systems, available in June 2003,

will bring high-speed backup and restores to life for enterprises and digital

content providers. Sustained native data transfer rates for the SAIT PetaSite

library will reach up to 2.88GB per second, and a standard file can be restored

in just over one minute.

StorageTek Backs Up a Petabyte of Weather Data

March 20, 2003

- With the help of StorageTek the National Center for Atmospheric

Research (NCAR) has surpassed the one-petabyte mark in its data holdings, which

range from satellite, atmosphere, ocean, and land-use data to depictions of

weather and climate from prehistoric times to the year 2100 and beyond.

At one petabyte, the archive is now more than a thousand times larger

than 1986, when it reached the one-terabyte level.


"In modern petabyte

scale-out storage systems the focus must be on the architectural organizations

of the entire system and all related performance dimensions.

The

storage node must be considered the building block of a complex ecosystem where

the interconnection plays a strategic role.

Architecturally the

storage node becomes the equivalent of an HDD in the old storage architecture -

but must provide more complex functions."

Emilio Billi, Founder - A3CUBE in his paper - Architecting a Massively Parallel Data Processor Platform for Analytics (pdf) (March 2014)
